Today I learned about Firefox troubleshooting mode.

Category Archives: Sys Admin

Fast website

There are some tips on making a website fast in this video: How is this Website so fast!?

Old Book Teardown #10: Digital Systems: Hardware Organization and Design (1973) | In The Lab

This post is part of my video blog and you can find more information about this video over here.

You can support this channel on Patreon: patreon.com/JohnElliotV

Silly Job Title: Component Wrangler

In this video I take a look at Digital Systems: Hardware Organization and Design by Fredrick J. Hill and Gerald R. Peterson published in 1973:

- archive.org

- Amazon USA (3rd Edition, 1991)

- Wiley (3rd Edition, 1991)

Here is the laundry list of links to things which came up during this video, including a few duplicates:

- EEVblog Electronics Community Forum – Index

- Forrest Mims’ Maverick Scientist – Hardcover Limited Edition (signed copies!)

- Make: Maverick Scientist: My Adventures as an Amateur Scientist

- EEVblog 1640 – Mailbag: 4k Microscope, Panaplex Displays, Piezo Singing, RF magic – EEVblog

- Code: The Hidden Language of Computer Hardware and Software : Petzold, Charles: Amazon.com.au: Books

- SERIAL PORT COMPLETE SECOND EDN: COM Ports, USB Virtual COM Ports, and Ports for Embedded Systems : AXELSON, J: Amazon.com.au: Books

- Usb Embedded Hosts: The Developer’s Guide : AXELSON, JAN: Amazon.com.au: Books

- USB Mass Storage: Designing and Programming Devices and Embedded Hosts : Axelson, Jan: Amazon.com.au: Books

- Usb Complete 5th Edn: The Developer’s Guide : AXELSON, JAN: Amazon.com.au: Books

- Instruction pipelining – Wikipedia

- IBM System/370 – Wikipedia

- direct memory access at DuckDuckGo

- Direct memory access – Wikipedia

- Content-addressable memory – Wikipedia

- computer tape technology at DuckDuckGo

- Diode–transistor logic – Wikipedia

- Compiler – Wikipedia

- carry completion adder at DuckDuckGo

- USB 3.0 Internal Connector Cable Specification

- USB 3.0 – Wikipedia

- USB3 Cables and Connectors Compliance Document

- Electronics Australia – Wikipedia

- Silicon Chip – Wikipedia

- Silicon Chip Online

- university of arizona at DuckDuckGo

- University of Arizona – Wikipedia

- University of Arizona in Tucson, AZ

- university of pennsylvania at DuckDuckGo

- University of Pennsylvania – Wikipedia

- University of Pennsylvania

- duquesne university at DuckDuckGo

- Duquesne University – Wikipedia

- Duquesne University

- fredrick j. hill at DuckDuckGo

- gerald r. peterson at DuckDuckGo

- Mini Projects – John’s wiki

- JMP001 – John’s wiki

- Karnaugh map – Wikipedia

- Introduction to switching theory and logical design : Hill, Fredrick J : Free Download, Borrow, and Streaming : Internet Archive

- apl programming language at DuckDuckGo

- APL (programming language) – Wikipedia

- diode logic at DuckDuckGo

- Diode logic – Wikipedia

- diode transistor logic at DuckDuckGo

- Diode–transistor logic – Wikipedia

- flip flop digital at DuckDuckGo

- Flip-flop (electronics) – Wikipedia

- random access memory at DuckDuckGo

- Random-access memory – Wikipedia

- semi random access memory at DuckDuckGo

- read only memory at DuckDuckGo

- Read-only memory – Wikipedia

- microprogramming at DuckDuckGo

- Microcode – Wikipedia

- computer interrupt at DuckDuckGo

- Interrupt – Wikipedia

- ripple-carry adder at DuckDuckGo

- minimum delay adder at DuckDuckGo

- Adder (electronics) – Wikipedia

- carry look ahead adder at DuckDuckGo

- Carry-lookahead adder – Wikipedia

- carry completion adder at DuckDuckGo

- Early completion – Wikipedia

- carry save multiplier at DuckDuckGo

- Carry-save adder – Wikipedia

- floating point at DuckDuckGo

- Floating-point arithmetic – Wikipedia

- IEEE 754 – Wikipedia

- why is self modifying code a bad idea at DuckDuckGo

- Self-modifying code – Wikipedia

- charles babbage at DuckDuckGo

- Charles Babbage – Wikipedia

- analytical engine at DuckDuckGo

- Analytical engine – Wikipedia

- difference engine at DuckDuckGo

- Difference engine – Wikipedia

- punch card jacquard at DuckDuckGo

- Jacquard machine – Wikipedia

- Punched card – Wikipedia

- automatic sequence controlled calculator at DuckDuckGo

- Harvard Mark I – Wikipedia

- ENIAC at DuckDuckGo

- ENIAC – Wikipedia

- univac 1 at DuckDuckGo

- UNIVAC I – Wikipedia

- john von neumann at DuckDuckGo

- John von Neumann – Wikipedia

- princeton university at DuckDuckGo

- Princeton University – Wikipedia

- Home | Princeton University

- word length at DuckDuckGo

- Word (computer architecture) – Wikipedia

- ALU at DuckDuckGo

- Arithmetic logic unit – Wikipedia

- CPU at DuckDuckGo

- Central processing unit – Wikipedia

- microprocessor at DuckDuckGo

- Microprocessor – Wikipedia

- high level languages at DuckDuckGo

- High-level programming language – Wikipedia

- Fortran – Wikipedia

- ALGOL – Wikipedia

- COBOL – Wikipedia

- PL/I – Wikipedia

- APL (programming language) – Wikipedia

- assembler at DuckDuckGo

- Assembly language – Wikipedia

- interpreter computer programming at DuckDuckGo

- Interpreter (computing) – Wikipedia

- compiler at DuckDuckGo

- Compiler – Wikipedia

- bytecode at DuckDuckGo

- Bytecode – Wikipedia

- jit compiler at DuckDuckGo

- Just-in-time compilation – Wikipedia

- sign magnitude at DuckDuckGo

- Signed number representations – Wikipedia

- ones complement at DuckDuckGo

- Ones’ complement – Wikipedia

- two’s complement at DuckDuckGo

- Two’s complement – Wikipedia

- memory overlay at DuckDuckGo

- Overlay (programming) – Wikipedia

- magnetic core memory at DuckDuckGo

- Magnetic-core memory – Wikipedia

- MMIX at DuckDuckGo

- MMIX – Wikipedia

- Knuth: MMIX

- demorgan’s theorem at DuckDuckGo

- De Morgan’s laws – Wikipedia

- list of logic symbols at DuckDuckGo

- List of logic symbols – Wikipedia

- diode transistor logic at DuckDuckGo

- Diode–transistor logic – Wikipedia

- transistor transistor logic at DuckDuckGo

- Transistor–transistor logic – Wikipedia

- MOS logic at DuckDuckGo

- MOSFET – Wikipedia

- CMOS – Wikipedia

- ecl logic at DuckDuckGo

- Emitter-coupled logic – Wikipedia

- dynamic random access memory at DuckDuckGo

- Dynamic random-access memory – Wikipedia

- static ram at DuckDuckGo

- Static random-access memory – Wikipedia

- crypto timing attacks at DuckDuckGo

- Timing attack – Wikipedia

- bipolar junction transistor at DuckDuckGo

- Bipolar junction transistor – Wikipedia

- CMOS – Wikipedia

- Flip-flop (electronics) § SR NOR latch – Wikipedia

- hysteresis at DuckDuckGo

- Hysteresis – Wikipedia

- hard drive technology at DuckDuckGo

- Hard disk drive – Wikipedia

- computer tape technology at DuckDuckGo

- Magnetic-tape data storage – Wikipedia

- read only memory at DuckDuckGo

- Read-only memory – Wikipedia

- content addressable memory at DuckDuckGo

- Content-addressable memory – Wikipedia

- Flip-flop (electronics) § Gated SR latch – Wikipedia

- conways law at DuckDuckGo

- Conway’s law – Wikipedia

- sprocket holes at DuckDuckGo

- Film perforations – Wikipedia

- direct memory access at DuckDuckGo

- Direct memory access – Wikipedia

- IBM 360 at DuckDuckGo

- IBM System/360 – Wikipedia

- The IBM System/360 | IBM

- IBM 370 at DuckDuckGo

- The IBM System/370 | IBM

- vacuum tube at DuckDuckGo

- Vacuum tube – Wikipedia

- floating point normalization at DuckDuckGo

- Floating Point/Normalization – Wikibooks, open books for an open world

- large scale integration at DuckDuckGo

- Very-large-scale integration – Wikipedia

- SIMD at DuckDuckGo

- Single instruction, multiple data – Wikipedia

- cpu instruction pipeline at DuckDuckGo

- Instruction pipelining – Wikipedia

- illiac iv at DuckDuckGo

- ILLIAC IV – Wikipedia

- lifo stack at DuckDuckGo

- Stack (abstract data type) – Wikipedia

- CDC STAR at DuckDuckGo

- CDC STAR-100 – Wikipedia

Thanks very much for watching! And please remember to hit like and subscribe! :)

The following is a product I use, picked at random from my collection, which may appear in my videos. Clicking through on it to find the green affiliate links, and using them before purchasing from eBay or AliExpress, is a great way to support the channel at no cost to you. Thanks!

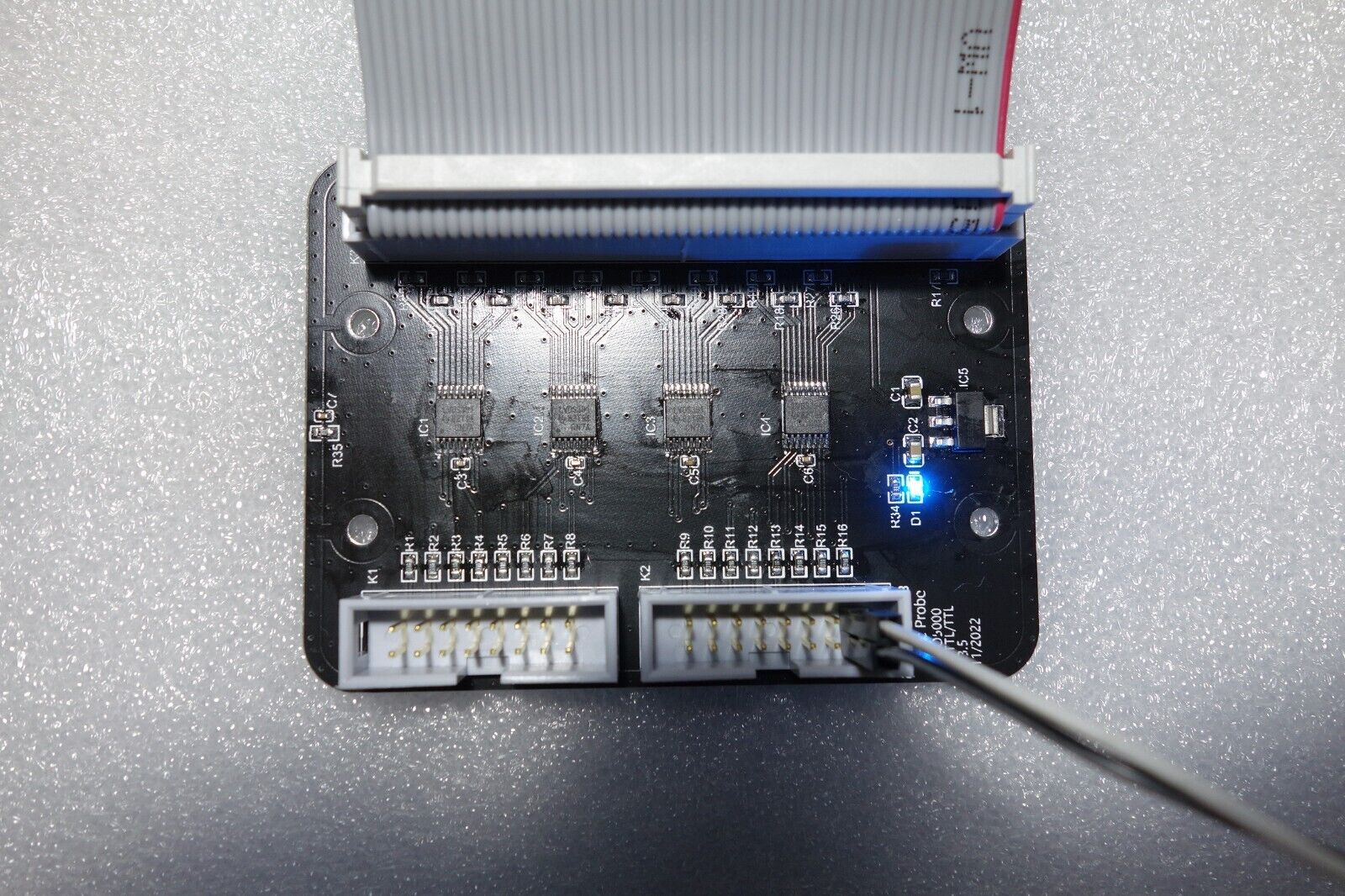

Rigol PLA2216 Compatible Logic Probe (notes)

Let’s go shopping!

JMP001 Symbol Keyboard now on Debian Linux

Thanks to my mate @edk from IRC, I learned about the compose key. I configured my KDE Plasma desktop to use Right Alt as the compose key in System Settings -> Input Devices -> Keyboard -> Advanced. Then I updated the firmware and deployed a .XCompose file that I generated, and now I have a symbol keyboard on my Debian Linux workstation!
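The post doesn't show the generated .XCompose file, so here is a minimal sketch of the file format wrapped in a shell function. The helper name write_xcompose and the three arrow/inequality sequences are hypothetical examples, not the JMP001 mapping. The include "%L" line pulls in the locale's default compose table so the standard sequences keep working alongside any custom ones.

```shell
# Hypothetical helper: write an example compose file to the path given as $1.
# The sequences are illustrative only. This overwrites any existing file.
write_xcompose() {
    cat > "$1" <<'EOF'
# Start from the default compose table for the current locale.
include "%L"

# <Multi_key> is whichever key is configured as the compose key.
<Multi_key> <minus> <greater> : "→" U2192
<Multi_key> <less> <minus> : "←" U2190
<Multi_key> <equal> <slash> : "≠" U2260
EOF
}

# Usage (overwrites ~/.XCompose):
#   write_xcompose "$HOME/.XCompose"
```

With Right Alt as the compose key, pressing Right Alt, then -, then > should type →. Applications generally need to be restarted before they pick up changes to ~/.XCompose.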

ZNC

This is a reminder for Future John. Check out ZNC for IRC bouncing: https://wiki.znc.in/ZNC

Subversion @ GitHub

I wanted to use Subversion to check out one of my GitHub repo branches, because an svn checkout only downloads the files it needs rather than a full copy of every file ever added. But I discovered that GitHub sunset Subversion integration earlier this year. Sad face. Still, I suppose the economics justify that decision. My research was a bit sketchy, because there is still heaps of documentation out there referring to GitHub features which no longer exist, but as a consequence of it I did happen to learn about:

Kubuntu 24.04

I want to install some software (MySQL and MySQL Workbench) to check it out, but I'm having trouble making it work on Debian, so I decided to spin up an Ubuntu instance for the job. I picked Kubuntu 24.04 (Ubuntu with the KDE Plasma desktop), and this is my first go at using Ubuntu 24.04. I figured I should document the installation experience, which I have done here.

Getting info about recent core dump (on Debian)

This is a note for Future John about how to get a backtrace (with debugging symbols) from a recent core dump on Debian:

DEBUGINFOD_URLS="https://debuginfod.debian.net" coredumpctl gdb

Then, at the (gdb) prompt, bt (short for backtrace) is the magical command: it prints the call stack of the current thread, which for a core dump is normally the thread that crashed.
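The DEBUGINFOD_URLS command from this note can be wrapped in a small shell function so the Debian debuginfod URL doesn't have to be remembered. This is a sketch, and debug_core is a hypothetical helper name. Any arguments are passed through to coredumpctl as a match (for example a PID or an executable path); with none, coredumpctl picks the most recent core dump.

```shell
# Hypothetical helper: open a core dump recorded by systemd-coredump in gdb,
# letting gdb fetch Debian's debugging symbols on demand via debuginfod.
# Arguments (if any) are passed to coredumpctl as a match, e.g. a PID.
debug_core() {
    DEBUGINFOD_URLS="https://debuginfod.debian.net" coredumpctl gdb "$@"
}

# Usage:
#   coredumpctl list     # see which core dumps are available
#   debug_core           # most recent core dump
#   debug_core 12345     # core dump from PID 12345
#
# Useful commands at the (gdb) prompt:
#   bt                   # backtrace of the current thread
#   thread apply all bt  # backtraces of every thread
#   quit                 # leave gdb
```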

Disable HTTP gzip compression in W3 Total Cache

This is a note for Future John.

I recently installed the W3 Total Cache plugin for WordPress on my blog.

Then I started having a weird problem: sometimes when I loaded the blog home page, I would see binary garbage rendered as text in my browser. I think the problem was either that the Content-Type header was being set incorrectly or that the data was being gzipped twice.

Going on the second theory, I found this setting:

W3 Total Cache > General Settings > Browser Cache > Enable HTTP (gzip) compression.

I disabled HTTP (gzip) compression and now my page seems to be working correctly again. But I will need to keep an eye on it. If you have a problem accessing my blog, please let me know!
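If the problem comes back, the double-gzip theory can be tested directly: save the raw response with curl (without --compressed, so curl leaves the body exactly as sent) and check whether it is still gzip data after one round of decompression. This is a sketch under that assumption, and is_double_gzipped is a hypothetical helper name.

```shell
# Hypothetical helper: succeed if the file in $1 is still gzip data after
# being decompressed once, i.e. the body was gzipped twice.
# gzip streams start with the magic bytes 1f 8b.
is_double_gzipped() {
    magic="$(gzip -dc < "$1" 2>/dev/null | head -c 2 | od -An -tx1 | tr -d ' \n')"
    [ "$magic" = "1f8b" ]
}

# Usage: fetch the page asking for gzip, keeping headers and raw body.
#   curl -s -H 'Accept-Encoding: gzip' -D headers.txt -o body.bin https://blog.jj5.net/
#   grep -iE '^content-(type|encoding):' headers.txt
#   is_double_gzipped body.bin && echo "body is gzipped twice"
```

Checking the saved headers covers the other theory too: if the body is gzip data but no Content-Encoding: gzip header was sent, a browser would also show it as garbage.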

New WordPress plugins for the blog

I have been rolling out CloudFront for a few of my domains, including blog.jj5.net.

In order to integrate CloudFront with WordPress I used the W3 Total Cache plugin.

And in order to set the <link rel="canonical"> element, I used the Yoast SEO plugin.
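One quick way to confirm that the canonical element is actually being emitted is to fetch a page and look for it. This is a rough sketch: show_canonical is a hypothetical helper name, it assumes the rel value is written in double quotes, and a regex is only an approximate check, not a real HTML parser.

```shell
# Hypothetical helper: print any <link rel="canonical"> element found in the
# HTML read from standard input (case-insensitive, double-quoted rel only).
show_canonical() {
    grep -io '<link[^>]*rel="canonical"[^>]*>'
}

# Usage:
#   curl -s https://blog.jj5.net/ | show_canonical
```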

At one point I had a problem with garbled content in my browser. It looked like the browser was trying to display compressed content as text. But now I can't reproduce it, so hopefully whatever the issue was, it is now fixed…