This was suggested on #lobsters today:

$ curl -o /dev/null -w "Connect: %{time_connect} TTFB: %{time_starttransfer} Total time: %{time_total} \n" https://www.progclub.org/
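For poking at the same numbers from a script, here's a rough Python analogue of the curl one-liner above. It's a sketch under simplifying assumptions: it speaks raw HTTP/1.1 with `Connection: close`, follows no redirects, and the `time_request` function and its dict keys are hypothetical names, not anything curl provides. Like curl's `time_connect`, the connect figure includes the DNS lookup plus the TCP handshake. The TTFB figure is measured to the first response byte, which only approximates `time_starttransfer`.

```python
import socket
import ssl
import time
from urllib.parse import urlsplit


def time_request(url, timeout=10.0):
    """Roughly mimic curl's time_connect, time_starttransfer and time_total.

    Sketch only: raw HTTP/1.1 GET with "Connection: close", no redirects,
    no response parsing. Returns seconds measured from the start.
    """
    parts = urlsplit(url)
    host = parts.hostname
    port = parts.port or (443 if parts.scheme == "https" else 80)
    target = parts.path or "/"
    if parts.query:
        target += "?" + parts.query

    start = time.perf_counter()
    # Includes the DNS lookup plus the TCP handshake, like curl's time_connect.
    sock = socket.create_connection((host, port), timeout=timeout)
    connect = time.perf_counter() - start
    try:
        if parts.scheme == "https":
            # TLS handshake (curl reports this separately as time_appconnect).
            sock = ssl.create_default_context().wrap_socket(sock, server_hostname=host)
        request = (
            f"GET {target} HTTP/1.1\r\n"
            f"Host: {parts.netloc.rpartition('@')[2]}\r\n"
            "Connection: close\r\n\r\n"
        )
        sock.sendall(request.encode("ascii"))
        sock.recv(1)  # block until the first response byte arrives
        ttfb = time.perf_counter() - start
        while sock.recv(65536):  # drain the rest until the server closes
            pass
        total = time.perf_counter() - start
    finally:
        sock.close()
    return {"connect": connect, "ttfb": ttfb, "total": total}
```

Calling `time_request("https://www.progclub.org/")` returns a dict with the three timings in seconds.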

This was on Hacker News today: CSRF, CORS, and HTTP Security headers Demystified.

The above article referred to the OWASP SameSite doco, and you can read about how to implement that with PHP.
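The linked material covers PHP. As a language-neutral illustration, here's a minimal sketch that builds the same kind of `Set-Cookie` header with Python's standard `http.cookies` module, which accepts a `samesite` attribute from Python 3.8. The cookie name `session` and the `session_cookie_header` function are hypothetical. With `SameSite=Lax`, the browser withholds the cookie on cross-site subrequests and cross-site POSTs, which is what blunts classic CSRF.

```python
from http.cookies import SimpleCookie


def session_cookie_header(session_id):
    """Build a Set-Cookie header for a hypothetical session cookie.

    Requires Python 3.8+ for the "samesite" attribute.
    """
    cookie = SimpleCookie()
    cookie["session"] = session_id
    morsel = cookie["session"]
    morsel["path"] = "/"
    morsel["secure"] = True     # only send over HTTPS
    morsel["httponly"] = True   # hide from document.cookie
    morsel["samesite"] = "Lax"  # not sent on cross-site POSTs/subrequests
    return cookie.output(header="Set-Cookie:")
```

Use `"Strict"` instead of `"Lax"` if you don't want the cookie sent even when someone follows a link to your site from elsewhere.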

I just wanted to get something on record that I’ve thought for many years, because I don’t think I’ve ever had much chance to discuss it: I believe JSON web services (“REST APIs”) and web applications should deal in only two HTTP verbs, GET and POST. You use GET for queries and POST for submissions. All POST operations go through the business logic for a particular service, and CRUDing URLs is, in my opinion, a supremely bad idea. Just wanted to get that on record. Thanks.

P.S. For web applications you should respond with a 3xx on success, not a 2xx; what you do for JSON web services is up to you, but for those a 2xx is probably fine.
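Here's a minimal sketch of that approach as a bare WSGI app. GET serves a query. POST runs one named business operation and then answers `303 See Other`, so the browser follows up with a GET (the Post/Redirect/Get pattern). The paths `/messages` and `/post-message`, the `post_message` function and the in-memory `MESSAGES` list are all hypothetical stand-ins.

```python
from urllib.parse import parse_qs

MESSAGES = []  # hypothetical in-memory store standing in for a database


def post_message(form):
    """Hypothetical business-logic operation behind one POST endpoint."""
    text = form.get("text", [""])[0].strip()
    if not text:
        raise ValueError("text is required")
    MESSAGES.append(text)


def _respond(start_response, status, body=b"", headers=()):
    start_response(status, [("Content-Type", "text/plain; charset=utf-8"),
                            ("Content-Length", str(len(body))), *headers])
    return [body]


def app(environ, start_response):
    method = environ["REQUEST_METHOD"]
    path = environ.get("PATH_INFO", "/")
    if method == "GET" and path == "/messages":
        # Query: read-only, safe to repeat and to bookmark.
        return _respond(start_response, "200 OK", "\n".join(MESSAGES).encode("utf-8"))
    if method == "POST" and path == "/post-message":
        # Submission: run the business logic, not a generic CRUD update.
        size = int(environ.get("CONTENT_LENGTH") or 0)
        form = parse_qs(environ["wsgi.input"].read(size).decode("utf-8"))
        try:
            post_message(form)
        except ValueError as exc:
            return _respond(start_response, "400 Bad Request", str(exc).encode("utf-8"))
        # 3xx on success: a browser refresh re-runs the GET instead of
        # resubmitting the form.
        return _respond(start_response, "303 See Other", headers=[("Location", "/messages")])
    return _respond(start_response, "404 Not Found", b"not found")
```

Any WSGI server can host `app`, e.g. `wsgiref.simple_server.make_server("", 8000, app).serve_forever()` from the standard library.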

A fun read: Falsehoods Programmers Believe about REST APIs.

Today I learned about the Retry-After HTTP header. It was mentioned over here.
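`Retry-After` (typically sent with a 503 or 429) carries either a number of seconds or an HTTP-date. Here's a minimal client-side sketch that turns either form into a wait in seconds, using the standard library's `email.utils.parsedate_to_datetime` for the date form. The `retry_after_seconds` name and its `now` parameter (there to make it testable) are hypothetical.

```python
from datetime import datetime, timezone
from email.utils import parsedate_to_datetime


def retry_after_seconds(value, now=None):
    """Seconds a Retry-After header asks the client to wait, or None.

    Accepts delay-seconds ("120") or an HTTP-date
    ("Wed, 21 Oct 2015 07:28:00 GMT").
    """
    value = value.strip()
    if value.isascii() and value.isdigit():
        return float(value)
    try:
        when = parsedate_to_datetime(value)
    except (TypeError, ValueError):  # older Pythons raise TypeError on junk
        return None
    if when.tzinfo is None:  # HTTP-dates are always GMT
        when = when.replace(tzinfo=timezone.utc)
    if now is None:
        now = datetime.now(timezone.utc)
    # A date already in the past means "retry now".
    return max(0.0, (when - now).total_seconds())
```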

This popped up on r/programming today: HTTP(S) Benchmark Tools.

To disable the HTTP Referrer (Referer) header in Firefox, open about:config and set network.http.sendRefererHeader to zero.

2017-12-09 jj5 – TODO: document this on my blog…

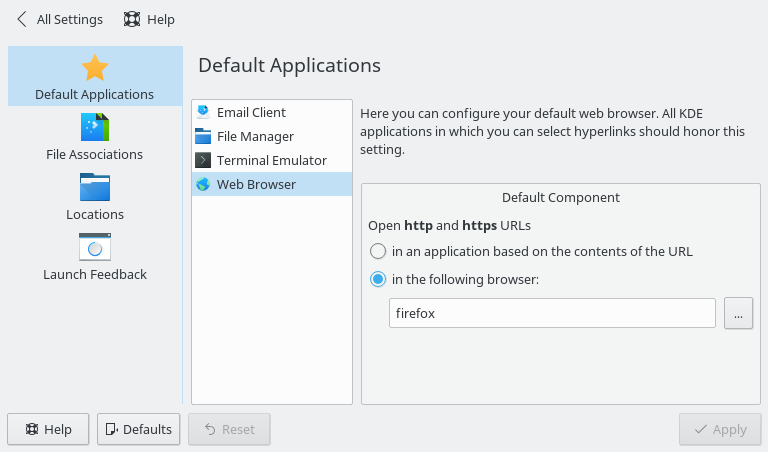

On Debian GNU/Linux 9.1 (stretch), when I try to open a *.desktop (application/x-desktop) link in a browser, I get:

A folder named ~/.cache/kioexec/krun/13821_0/ already exists.

Searching for:

A folder named kioexec krun already exists

turned up diddly squat.

I solved the issue (for me) by changing:

System Settings -> Personalization -> Applications -> Default Applications -> Web Browser

from:

Open http and https URLs in an application based on the contents of the URL

to:

Open http and https URLs in the following browser: firefox

I had an issue with Apache2 serving a JavaScript file improperly, which I seem to have fixed by making sure the file was terminated with a new-line character… this was really hard to diagnose and resolve! In Firefox the file just never finished downloading, whereas the Apache2 logs showed a 200 result… I think it may have had something to do with automatic compression, which is a dark art that I do not understand (mumbles something about mod_deflate…).
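The root cause here isn't established, but if you want to apply the same workaround across a site, here's a small sketch that appends a trailing newline to any non-empty file lacking one. The function names and the default `*.js` pattern are hypothetical choices, and the script edits files in place, so run it on a copy or under version control.

```python
from pathlib import Path


def ensure_trailing_newline(path):
    """Append a newline to a non-empty file that doesn't end with one.

    Returns True if the file was changed.
    """
    p = Path(path)
    data = p.read_bytes()
    if data and not data.endswith(b"\n"):
        with p.open("ab") as f:
            f.write(b"\n")
        return True
    return False


def fix_tree(root, pattern="*.js"):
    """Apply ensure_trailing_newline to every matching file under root.

    Returns the list of files that were changed.
    """
    return [p for p in Path(root).rglob(pattern)
            if p.is_file() and ensure_trailing_newline(p)]
```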

Found this:

    <Location /jira>
        RequestHeader unset Authorization
        ProxyPreserveHost On
        ProxyPass http://jiraserver/jira
        ProxyPassReverse http://jiraserver/jira
    </Location>

Over here. Wanted to keep a note of those settings.